SynSense SPECK™

Our system is a fully neuromorphic localization ecosystem developed for the SynSense SPECK™, combining an event camera and a System-on-Chip neuromorphic processor.

LENS is a compact, brain-inspired localization system for autonomous robots. Using spiking neural networks, dynamic vision sensors, and a neuromorphic processor on a single SPECK™ chip, LENS performs real-time, event-driven place recognition with models 99% smaller and over 100× more energy-efficient than traditional systems. Deployed on a hexapod robot, it can learn and recognize over 700 places using fewer than 44k parameters, demonstrating the first large-scale, fully event-driven localization on a mobile platform.

LENS is a fully capable system for deployment on resource-constrained robotic platforms, supporting mapping and localization across multiple terrains and environments.

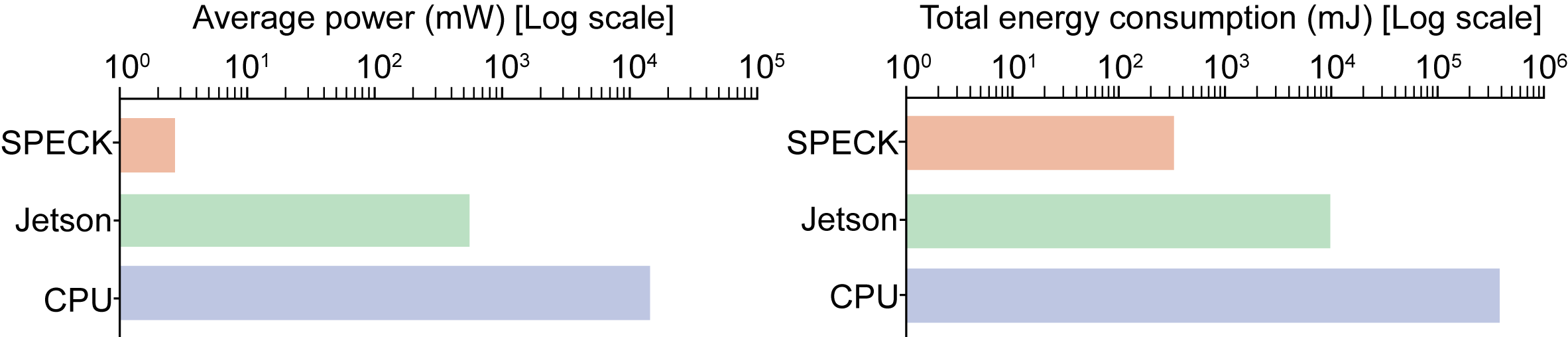

When deployed on neuromorphic hardware, LENS uses less than 1% of the power required by conventional compute platforms.

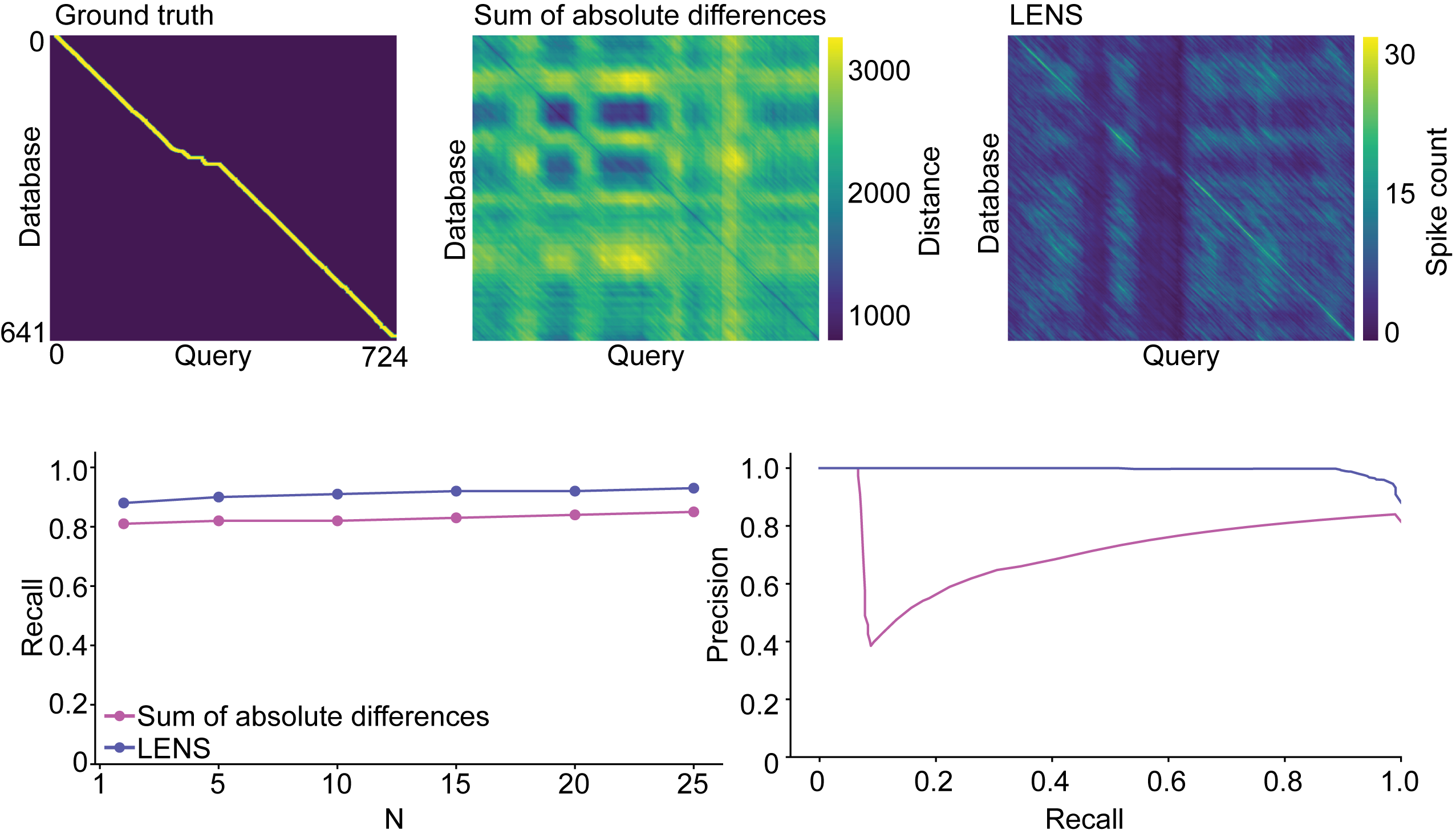

LENS achieves localization accuracy that outperforms state-of-the-art visual place recognition (VPR) methods, with models smaller than 180 KB and just 44k parameters that can map routes of up to 8 km.
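A quick back-of-envelope check shows how roughly 44k parameters can fit under 180 KB. This sketch assumes 44k means 44,000 parameters, that each is stored as a 32-bit (4-byte) value, and that 1 KB = 1000 bytes; the actual storage format is not stated here, and lower-precision weights (for example 8-bit) would shrink the footprint further.

```python
def model_size_kb(num_params: int, bytes_per_param: int) -> float:
    """Raw weight storage in kilobytes (1 KB = 1000 bytes), ignoring file overhead."""
    return num_params * bytes_per_param / 1000


# Assumed: ~44,000 parameters stored as 32-bit floats (4 bytes each)
fp32_kb = model_size_kb(44_000, 4)
# Assumed alternative: the same parameters quantized to 8 bits (1 byte each)
int8_kb = model_size_kb(44_000, 1)

print(f"float32: {fp32_kb:.0f} KB")  # float32: 176 KB
print(f"int8:    {int8_kb:.0f} KB")  # int8:    44 KB
```

Under the 32-bit assumption, the raw weights come to about 176 KB, consistent with the sub-180 KB figure.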

LENS trains on static DVS frames built from events collected over a user-specified time window. These temporal representations of event frames train in minutes, enabling rapid deployment. Event counts form identifiable place representations through their unique, individual spiking patterns.
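As a rough illustration of turning an event stream into a static count frame, the sketch below bins the events that fall inside one time window into a per-pixel count grid and flattens it into a vector. The `(timestamp_us, x, y, polarity)` tuple layout, microsecond timestamps, and the helper names `events_to_count_frame` and `flatten` are hypothetical choices for illustration, not the LENS or SPECK™ API; polarity is simply ignored here.

```python
from typing import Iterable, List, Tuple

# Hypothetical event layout: (timestamp_us, x, y, polarity)
Event = Tuple[int, int, int, int]


def events_to_count_frame(
    events: Iterable[Event],
    width: int,
    height: int,
    t_start_us: int,
    window_us: int,
) -> List[List[int]]:
    """Count events per pixel within [t_start_us, t_start_us + window_us).

    Polarity is ignored, so ON and OFF events both add to the count.
    """
    frame = [[0] * width for _ in range(height)]
    t_end_us = t_start_us + window_us
    for t, x, y, _polarity in events:
        # Keep only events inside the time window and within sensor bounds
        if t_start_us <= t < t_end_us and 0 <= x < width and 0 <= y < height:
            frame[y][x] += 1
    return frame


def flatten(frame: List[List[int]]) -> List[int]:
    """Row-major flattening, e.g. one input value per pixel."""
    return [count for row in frame for count in row]


if __name__ == "__main__":
    demo_events = [
        (100, 0, 0, 1),
        (200, 0, 0, 0),
        (300, 2, 1, 1),
        (1500, 1, 1, 1),  # falls outside a 1000 us window starting at 0
    ]
    frame = events_to_count_frame(demo_events, width=3, height=2, t_start_us=0, window_us=1000)
    print(frame)           # [[2, 0, 0], [0, 0, 1]]
    print(flatten(frame))  # [2, 0, 0, 0, 0, 1]
```

Changing `window_us` trades temporal resolution for denser frames: a longer window accumulates more events per pixel.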

This work builds on a variety of excellent work in robotic localization.

Sparse event VPR develops the concept of using a small number of event pixels to perform accurate localization.
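To illustrate the general idea of matching places with only a few event pixels, the sketch below picks the k pixels whose event counts vary most across reference frames, then compares a query against each reference using the sum of absolute differences over just those pixels. The variance-based selection rule, the distance metric, and the function names are hypothetical choices for illustration, not the published Sparse event VPR method.

```python
from statistics import pvariance
from typing import List, Sequence


def select_sparse_pixels(references: Sequence[Sequence[int]], k: int) -> List[int]:
    """Return indices of the k pixels with the highest variance across references."""
    num_pixels = len(references[0])
    variances = [pvariance([ref[i] for ref in references]) for i in range(num_pixels)]
    # Highest variance first; ties broken by lower pixel index
    ranked = sorted(range(num_pixels), key=lambda i: (-variances[i], i))
    return ranked[:k]


def match_place(
    query: Sequence[int],
    references: Sequence[Sequence[int]],
    pixels: Sequence[int],
) -> int:
    """Index of the reference with the smallest sum of absolute differences on `pixels`."""
    def sad(ref: Sequence[int]) -> int:
        return sum(abs(query[i] - ref[i]) for i in pixels)

    return min(range(len(references)), key=lambda r: sad(references[r]))


if __name__ == "__main__":
    # Three hypothetical reference places, each a flattened 6-pixel event-count frame
    refs = [
        [5, 0, 1, 0, 2, 2],
        [0, 6, 1, 0, 2, 2],
        [0, 0, 1, 7, 2, 2],
    ]
    pixels = select_sparse_pixels(refs, k=3)
    print(pixels)                                          # [3, 1, 0]
    print(match_place([0, 5, 1, 1, 2, 2], refs, pixels))   # 1
```

Pixels that look the same at every place (here indices 2, 4, and 5) carry no discriminative information, so discarding them reduces computation without hurting the match in this toy case.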

VPRSNN introduced one of the first spiking neural networks for visual place recognition and inspired our previous work, VPRTempo, an efficiently trained and inferenced network that was adapted for this work.

In addition, a lot of great work has been done in localization and navigation using neuromorphic hardware.

Fangwen Yu's work developed an impressive multi-modal neural network for accurate place recognition. Le Zhu pioneered sequence learning with event cameras in vegetated environments. Tom van Dijk deployed an impressively compact neuromorphic system on a tiny autonomous drone for visual route following.

@article{HinesLENS2025,
  author = {Adam D. Hines and Michael Milford and Tobias Fischer},
  title = {A compact neuromorphic system for ultra–energy-efficient, on-device robot localization},
  journal = {Science Robotics},
  volume = {10},
  number = {103},
  pages = {eads3968},
  year = {2025},
  doi = {10.1126/scirobotics.ads3968},
  URL = {https://www.science.org/doi/abs/10.1126/scirobotics.ads3968}
}